Chinese Breakthrough DeepSeek-OCR — What’s True, What’s the Hype?

How a 3-Billion-Parameter Open-Source Model from China Is Redefining Efficiency, Compression, and the Future Economics of Artificial Intelligence.

Author credentials:

KBS Sidhu, IAS (retd), former Chief Secretary, Punjab, analyses the open-source OCR model from DeepSeek, one of China’s leading AI firms

DeepSeek-OCR — Is it a Big Deal?

The Headline Claim

On 20 October 2025, the Chinese artificial intelligence company DeepSeek released DeepSeek-OCR, an open-source 3-billion-parameter vision–language model designed for optical character recognition (OCR) and document compression. It is available under the MIT licence on GitHub and Hugging Face. In essence, DeepSeek-OCR compresses lengthy text documents into compact visual tokens, enabling large language models (LLMs) to process far longer inputs using fewer computational resources. The launch immediately drew global attention because it promised to ease one of AI’s hardest constraints: the memory and compute cost of long contexts, which climbs steeply as inputs grow.
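Why shorter inputs matter so much: in a standard transformer, each self-attention layer scores every token against every other token, so that part of the cost grows with the square of the input length. The sketch below is a back-of-the-envelope illustration, not DeepSeek’s code. The 10,000-token document is hypothetical, and the sketch ignores costs that grow only linearly (feed-forward layers) as well as the cost of the vision encoder itself.

```python
def attention_pairs(num_tokens: int) -> int:
    """Query-key scores computed by one full self-attention layer."""
    return num_tokens * num_tokens


def attention_savings(text_tokens: int, compression: float) -> float:
    """How many times fewer attention scores are needed when the same content
    is carried by text_tokens / compression tokens instead of text_tokens."""
    compressed = max(1, round(text_tokens / compression))
    return attention_pairs(text_tokens) / attention_pairs(compressed)


# Hypothetical 10,000-token document compressed 10x to 1,000 tokens.
print(attention_pairs(10_000))        # 100000000
print(attention_pairs(1_000))         # 1000000
print(attention_savings(10_000, 10))  # 100.0
```

A tenfold cut in tokens therefore removes roughly a hundredfold of the attention work, which is why compression, rather than ever-larger context windows, is attractive.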

What the Model Actually Is

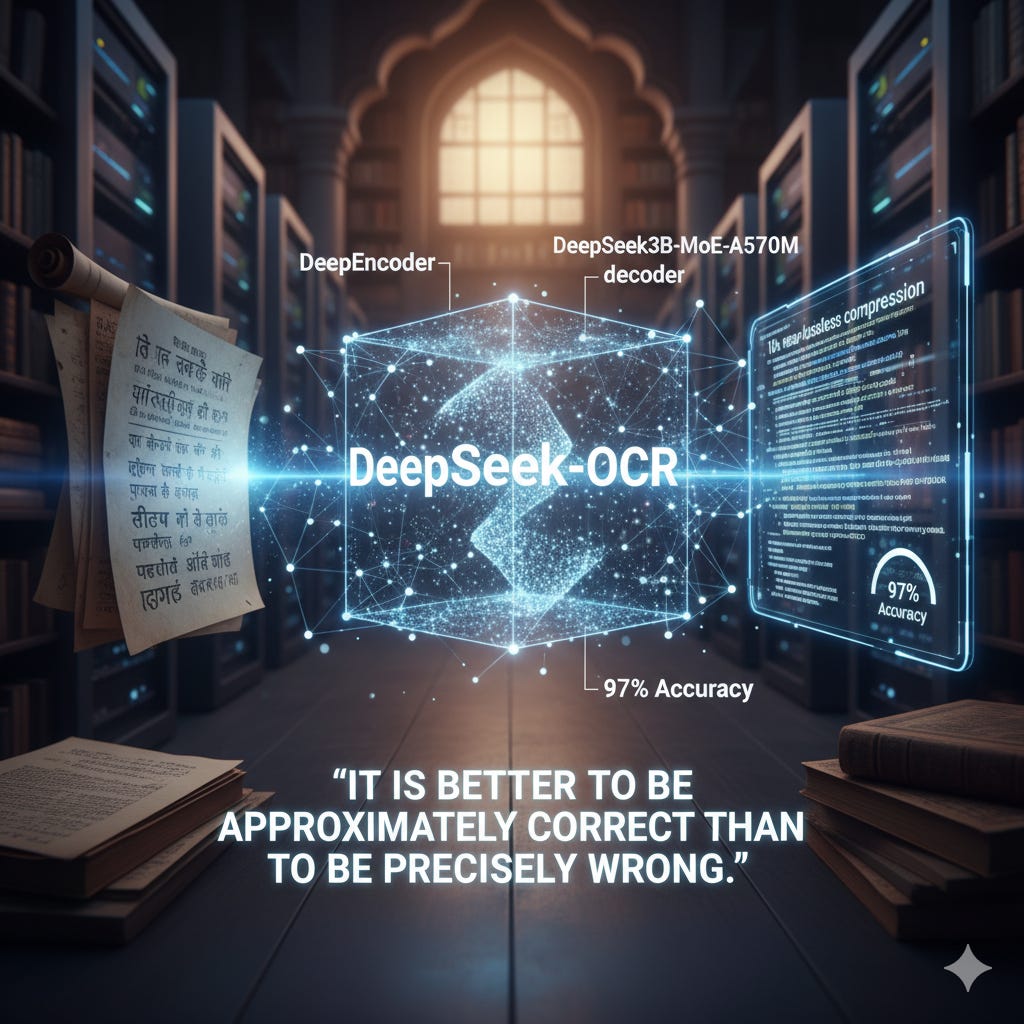

DeepSeek-OCR is a two-part system. The first component, the DeepEncoder, is a 380-million-parameter vision encoder that converts a document image into a small number of “vision tokens.” The second, the DeepSeek3B-MoE-A570M decoder, reads these vision tokens and reconstructs the text. Although the decoder carries about 3 billion parameters in total, only around 570 million are activated for each token during inference, because its Mixture-of-Experts (MoE) architecture routes every token through a small subset of specialised sub-networks, or “experts.” The decoder therefore has the capacity of a large network with per-token compute closer to that of a much smaller one.
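A toy illustration of why an MoE model activates only part of its weights: a small router scores every expert for each token, and only the top-k experts actually run. This is a generic top-k gating sketch, not DeepSeek’s implementation. The eight scalar “experts”, the router scores and k = 2 are invented for illustration; real MoE layers work on vectors with learned weights, and DeepSeekMoE designs also include always-active shared experts.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_scores, k):
    """Return (expert_index, gate_weight) for the k highest-scoring experts,
    with gate weights renormalised over just those k experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:k]
    gates = softmax([router_scores[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_forward(x, experts, router_scores, k):
    """Weighted sum of the chosen experts' outputs. Experts that are not
    chosen are never called: that is where the compute saving comes from."""
    out, calls = 0.0, 0
    for idx, gate in top_k_route(router_scores, k):
        out += gate * experts[idx](x)
        calls += 1
    return out, calls


# Eight toy experts; expert i simply scales its input by (i + 1).
experts = [lambda x, s=s: s * x for s in range(1, 9)]
scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # hypothetical router output
y, calls = moe_forward(1.0, experts, scores, k=2)
print(calls)  # 2 (only two of the eight experts ran)
```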

This design choice is central to DeepSeek’s philosophy: smarter allocation of compute rather than brute-force scaling. It stands as a reminder that efficiency in architecture can often outpace sheer parameter count in delivering real-world performance.

The “Ten-Fold Lossless Compression” Debate

DeepSeek’s publicity materials claim that the model achieves “up to 10× lossless text compression.” Taken literally, this means a document can be encoded in one-tenth as many vision tokens as the text tokens it would otherwise occupy, and then decoded with no loss of information. However, “lossless” is a slight exaggeration. Independent evaluations and the model’s own benchmarks show about 97 percent decoding accuracy at compression ratios of up to tenfold: an impressive figure, but not a perfect one. DeepSeek’s own paper reports that accuracy falls to roughly 60 percent at twentyfold compression. The correct phrase would be near-lossless.
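Both quantities in this debate can be made concrete. The compression ratio is the number of text tokens a page would need divided by the number of vision tokens used to encode it. “Lossless” would mean the decoded text matches the original exactly. The sketch below scores decoding quality with a character-level accuracy based on Levenshtein edit distance. That is one common choice and an assumption here, not necessarily the exact metric behind DeepSeek’s 97 percent figure.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens represented per vision token."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance, computed with a single rolling row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def char_accuracy(reference: str, decoded: str) -> float:
    """1 minus normalised edit distance; 1.0 means a perfect (lossless) decode."""
    if not reference:
        return 1.0 if not decoded else 0.0
    return max(0.0, 1 - edit_distance(reference, decoded) / len(reference))


print(compression_ratio(1000, 100))                 # 10.0
print(char_accuracy("land record", "land record"))  # 1.0
print(char_accuracy("land record", "lend record"))  # one wrong character out of 11
```

Under this metric, 97 percent accuracy still means roughly three characters in every hundred come back wrong, which is why “near-lossless” is the fairer description.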

The takeaway is that DeepSeek-OCR can dramatically shrink input size while keeping accuracy high. A tenfold compression with so little degradation is a notable result for open-source OCR and could reshape how we handle long documents and data-heavy records.

Real-World Efficiency

Where DeepSeek-OCR truly shines is in efficiency. According to DeepSeek’s paper, the model can process more than 200,000 pages per day on a single A100-40GB GPU, a figure cited for generating training data in production. Competing models may need multiple GPUs to reach similar throughput. DeepSeek-OCR also uses far fewer tokens than earlier systems: on the OmniDocBench benchmark, about 100 vision tokens per page are enough to outperform GOT-OCR2.0 using 256 tokens, and fewer than 800 are enough to outperform MinerU2.0, which averages more than 6,000 tokens per page.
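The token comparisons translate into simple ratios. The sketch uses the per-page figures quoted above. Because MinerU2.0 is described as using more than 6,000 tokens and DeepSeek-OCR fewer than 800, the second ratio is a lower bound.

```python
def token_reduction(rival_tokens: float, deepseek_tokens: float) -> float:
    """How many times fewer tokens per page DeepSeek-OCR uses than a rival."""
    if deepseek_tokens <= 0:
        raise ValueError("deepseek_tokens must be positive")
    return rival_tokens / deepseek_tokens


# Per-page token figures from the OmniDocBench comparison above.
print(f"{token_reduction(256, 100):.2f}x fewer than GOT-OCR2.0")  # 2.56x
print(f"{token_reduction(6000, 800):.2f}x fewer than MinerU2.0")  # 7.50x, a lower bound
```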

The company also claims the system cuts GPU costs by roughly one-fifth, although this precise fraction has not been independently confirmed. Still, the direction of change follows from the token counts: fewer tokens per page means less computation, and therefore a lower cost, for every page processed.
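Cost per page follows directly from throughput: the price of one GPU-hour divided by the pages processed in that hour. The sketch below assumes a hypothetical rental price of 2 US dollars per GPU-hour (not a quoted figure; substitute a real one) and the 200,000-pages-per-day throughput reported above. It ignores storage, staffing and pre-processing costs.

```python
def cost_per_1000_pages(gpu_hourly_cost: float, pages_per_day: float) -> float:
    """GPU rental cost of processing 1,000 pages on one GPU running around the clock."""
    if pages_per_day <= 0:
        raise ValueError("pages_per_day must be positive")
    pages_per_hour = pages_per_day / 24
    return gpu_hourly_cost / pages_per_hour * 1000


# Hypothetical price: 2.00 US dollars per GPU-hour.
print(f"${cost_per_1000_pages(2.00, 200_000):.2f} per 1,000 pages")  # $0.24
# A hypothetical rival at half the throughput costs twice as much per page.
print(f"${cost_per_1000_pages(2.00, 100_000):.2f} per 1,000 pages")  # $0.48
```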

The Team Behind the Model

DeepSeek’s press materials and several newsletters stated that DeepSeek-OCR was created by “three former GOT-OCR2.0 researchers.” This is only partially true. The lead author, Haoran Wei, indeed worked on both the GOT-OCR2.0 and DeepSeek-OCR projects. The other two listed authors, Yaofeng Sun and Yukun Li, are not on the GOT-OCR2.0 author list. The overlap, therefore, is partial. It illustrates how easy it is for corporate communication to blur nuance — one researcher’s continuity becomes “three former authors” in headlines.

The Core Innovation: Optical Mapping

DeepSeek-OCR’s defining feature is its “optical mapping” technique, sometimes described as contextual optical compression. Instead of feeding raw text into an LLM, the system renders the document as an image, encodes that image into a set of vision tokens, and then decodes those tokens back into text. Because far fewer vision tokens are needed than the text tokens they replace, each vision token carries the content of several text tokens: about ten of them at a 10× compression ratio.
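The vision-token counts follow from simple arithmetic on the encoder’s geometry. The sketch below assumes, from the DeepSeek-OCR paper’s description of the DeepEncoder, that a square page image is split into 16 × 16-pixel patches and that the resulting patch tokens are then compressed 16-fold. Treat both numbers as assumptions to check against the paper. Under them, a 640 × 640 page comes out at the 100 vision tokens quoted in the GOT-OCR2.0 comparison.

```python
def vision_tokens(side_px: int, patch_px: int = 16, downsample: int = 16) -> int:
    """Vision tokens for a square image: one token per patch_px x patch_px patch,
    then the patch tokens are compressed by a factor of `downsample` (assumed 16)."""
    if side_px % patch_px:
        raise ValueError("image side must be a multiple of the patch size")
    patches = (side_px // patch_px) ** 2
    return patches // downsample


for side in (512, 640, 1024, 1280):
    print(side, vision_tokens(side))
# 512 64
# 640 100
# 1024 256
# 1280 400
```

Higher resolutions buy more vision tokens, and hence more capacity for dense pages, at a proportionally higher cost in the decoder.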

This approach turns the conventional pipeline upside down: rather than asking text models to expand their context windows indefinitely, it compresses information visually before feeding it into text decoders. It’s a reversal of logic — a step towards “teaching models to see text” instead of just reading it.

Strengths, Limitations, and Lessons in Precision

DeepSeek-OCR’s performance on benchmarks and real-world tests is undeniably impressive. It provides an open-source, efficient alternative to the large proprietary OCR systems that dominate enterprise AI. Yet, two phrases in its promotion require careful interpretation:

“3B parameters”: accurate for the decoder’s total, but only about 570M are active for each token during inference.

“Lossless compression”: better read as near-lossless, with about 97% accuracy at up to 10× compression.

It’s a timely reminder of a favourite saying among economists and engineers alike:

“It is better to be approximately correct than to be precisely wrong.”

This distinction matters in AI discourse, where exaggeration often drowns nuance. DeepSeek’s achievement is not diminished by clarification — in fact, understanding these details enhances its credibility.

Why It Matters — And What India Can Learn

DeepSeek-OCR signals a profound shift in how artificial intelligence might handle long documents and large-scale digitisation. For India, where millions of pages of government files, court records, and historical archives await digital transformation, this model offers a possible breakthrough.

Instead of relying on massive, expensive computing clusters, systems like DeepSeek-OCR demonstrate that clever design can substitute for brute compute power. This insight should guide India’s AI strategy — especially in e-governance, education, and multilingual OCR. Imagine compressing and decoding vast collections of land records, parliamentary debates, or old regional newspapers written in Devanagari, Gurmukhi, or Tamil, using affordable hardware in district-level data centres.
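A rough planning estimate shows why throughput matters at this scale. The sketch assumes that the reported 200,000 pages per GPU per day would hold for Indian records, which is unproven, especially for Indic scripts, and uses a hypothetical archive of 5 million pages. Real pipelines would add scanning, quality checks and re-runs on top.

```python
import math


def gpu_days_needed(total_pages: int, pages_per_gpu_day: int = 200_000,
                    gpus: int = 1) -> int:
    """Whole days needed to process an archive, assuming steady throughput."""
    if gpus < 1:
        raise ValueError("need at least one GPU")
    return math.ceil(total_pages / (pages_per_gpu_day * gpus))


# Hypothetical district archive of 5 million pages.
print(gpu_days_needed(5_000_000))          # 25
print(gpu_days_needed(5_000_000, gpus=4))  # 7
```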

The lesson is straightforward: innovation need not depend on endless scaling or billion-dollar compute budgets. By adopting efficient architectures, supporting open-source research, and building Indic-language benchmarks for models like DeepSeek-OCR, India could leapfrog into a new phase of cost-effective AI deployment.

The Bottom Line

DeepSeek-OCR is not hype — it’s a genuine technical advance with real-world implications. The model exists, it’s open-source, and its token efficiency and throughput outperform many predecessors. Some of its claims — particularly about the research team and “lossless” compression — need qualification, but these are matters of emphasis, not deception.

The broader message, however, extends beyond DeepSeek. The release shows that brute compute power is not always the answer to practical AI problems. The future lies in smarter architectures, context compression, and a disciplined approach to accuracy — one that values being “approximately correct” far more than being “precisely wrong.”